Imagine a digital entity that doesn’t just follow commands but thinks, learns, and acts autonomously. From managing your smart home to powering self-driving cars, AI agents are quietly revolutionising how we interact with technology. But what exactly makes these systems tick, and how do they bridge the gap between simple automation and truly intelligent behaviour? Let’s dive into the fascinating world of AI agents and uncover the mechanisms that drive their decision-making processes.

What is an AI Agent

This term is now everywhere. An AI agent is an autonomous computational entity designed to perceive its environment through sensors (e.g., text inputs, APIs, cameras), reason using artificial intelligence techniques, and act upon that environment via effectors (e.g., APIs, robotic arms, UI interactions) to achieve specific objectives. Unlike traditional software, AI agents operate with a degree of independence, leveraging machine learning (ML) and natural language processing (NLP) to adapt dynamically to changing conditions.

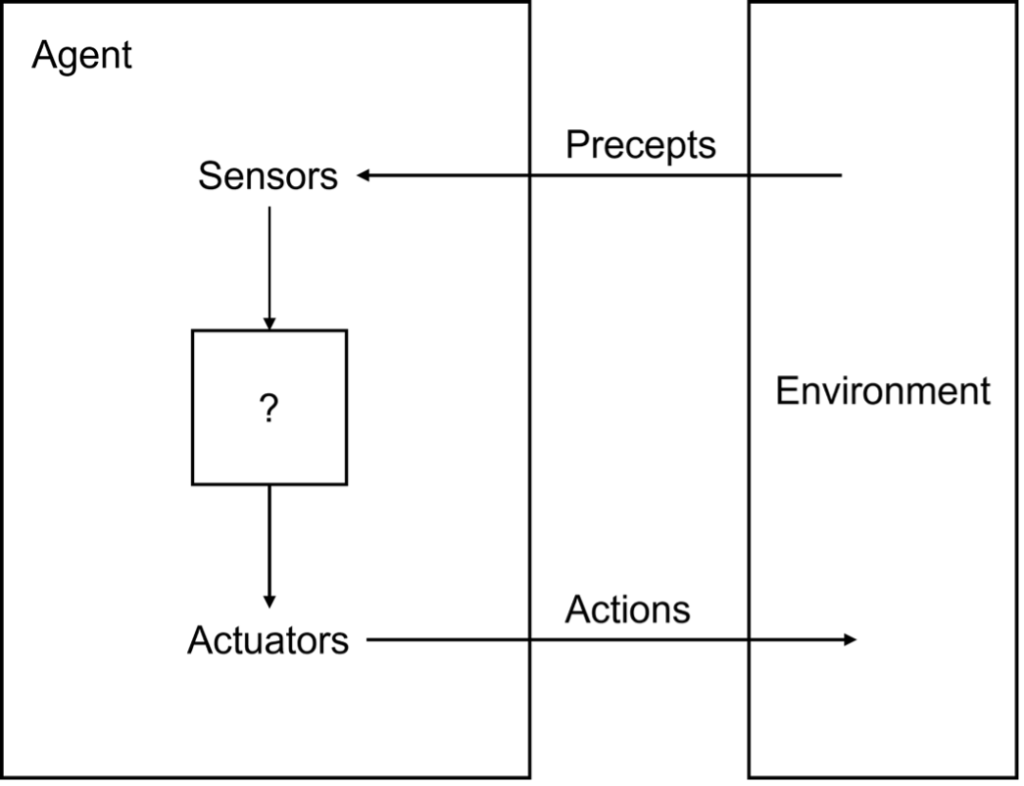

At its core, an AI agent embodies the perceive-think-act cycle. The perception phase involves ingesting data from diverse sources—text prompts, databases, or real-time sensor feeds. During reasoning, the agent processes information using LLMs or rule-based systems to make context-aware decisions. Finally, in the action phase, it executes tasks through tools or APIs, such as generating responses, updating databases, or controlling physical devices.

Consider a customer service agent that analyses a user’s query (perception), retrieves relevant information from a knowledge base (reasoning), and responds via email or escalates the issue (action). Modern agents, powered by LLMs like GPT-4o, transcend static rule-based systems by incorporating learning (e.g., reinforcement learning from human feedback) and adaptation (e.g., fine-tuning based on user interactions). Critically, AI agents are rational: they maximise a performance metric, whether explicit (e.g., customer satisfaction scores) or learned (e.g., reward functions in reinforcement learning) [1].

Figure 1 Agents interact with environments through sensors and actuators
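
The perceive-think-act cycle can be sketched in a few lines of Python. This is a minimal illustration rather than a reference design: the `Agent` class, its three callables, and the keyword-based escalation rule are all hypothetical stand-ins, and a real agent would typically call an LLM inside `think`.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Agent:
    """Hypothetical minimal agent: one pass through perceive, think, act."""
    perceive: Callable[[], str]      # sensor: returns the next observation
    think: Callable[[str], str]      # reasoning: maps an observation to an action
    act: Callable[[str], None]       # effector: carries out the chosen action

    def step(self) -> str:
        observation = self.perceive()
        action = self.think(observation)
        self.act(action)
        return action


def run_demo() -> Tuple[List[str], List[str]]:
    inbox = ["Where is my order?", "I want to speak to a lawyer"]
    performed: List[str] = []
    agent = Agent(
        perceive=lambda: inbox.pop(0),
        # Keyword rule standing in for an LLM call (assumption).
        think=lambda query: "escalate" if "lawyer" in query.lower() else "reply",
        act=performed.append,
    )
    actions = [agent.step() for _ in range(2)]
    return actions, performed
```

Calling `run_demo()` processes both queries in order, replying to the first and escalating the second.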

Traditional AI Agent Types

The foundational taxonomy of AI agents, introduced in Artificial Intelligence: A Modern Approach (Russell & Norvig, 1995), categorises agents by their decision-making sophistication and adaptability to environmental complexity [1]. This evolution from simple reactive systems to complex adaptive learners showcases the progression of AI capabilities.

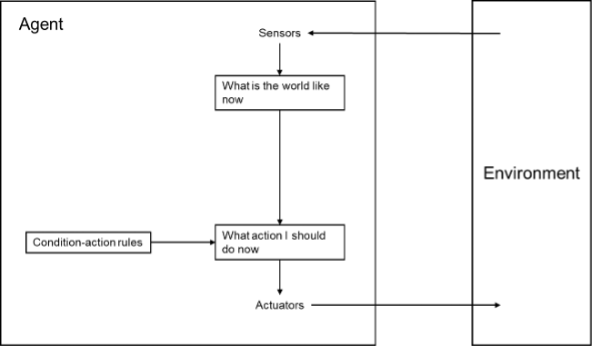

Simple reflex agents operate on condition-action rules, responding directly to current percepts without historical context. Like a thermostat activating heating below a temperature threshold, these agents excel in fully observable, deterministic environments where immediate responses suffice. While they offer rapid decision-making with minimal computational overhead, they struggle with partially observable scenarios.

Figure 2 Schematic diagram of a simple reflex agent
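
The thermostat example reduces to a single condition-action rule. The sketch below assumes a 20 °C threshold and two action names chosen purely for illustration.

```python
def thermostat_agent(temperature_c: float, threshold_c: float = 20.0) -> str:
    """Simple reflex agent: decides from the current percept only, with no memory."""
    if temperature_c < threshold_c:
        return "heat_on"
    return "heat_off"
```

Because the function keeps no state, identical percepts always produce identical actions, which is exactly why this design breaks down when the current reading does not reveal everything relevant.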

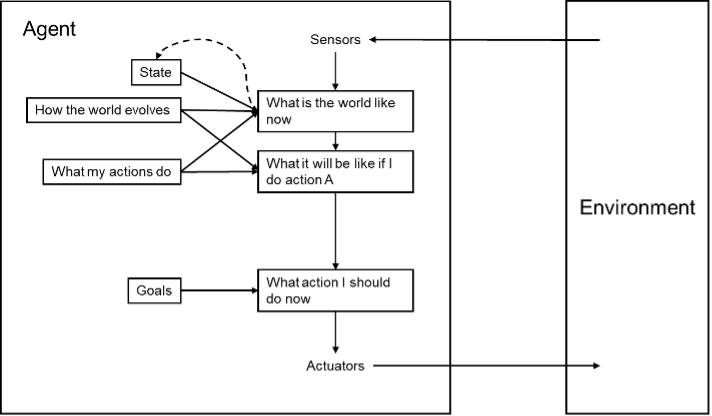

Model-based reflex agents enhance this framework by maintaining an internal environmental model. A self-driving car exemplifies this approach, using real-time camera data and preloaded maps to navigate while predicting pedestrian movements based on traffic rules and historical patterns. This state tracking enables greater adaptability but increases computational demands.

Figure 3 A model-based reflex agent
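
A toy version of the state tracking described above: the agent remembers the last known distance to a pedestrian and an estimated closing speed, so it can keep predicting when the percept is missing (for example, during an occlusion). The class name, the 5-metre danger zone, and the constant-speed prediction are all illustrative assumptions, far simpler than anything a real vehicle would use.

```python
from typing import Optional


class ModelBasedBrakeAgent:
    """Keeps an internal model (distance, closing speed) between percepts."""

    def __init__(self, danger_zone: float = 5.0) -> None:
        self.danger_zone = danger_zone              # metres considered unsafe
        self.last_distance: Optional[float] = None  # internal state
        self.closing_speed = 0.0                    # metres per step, estimated

    def step(self, observed_distance: Optional[float]) -> str:
        if observed_distance is not None:
            # Update the model from the new percept.
            if self.last_distance is not None:
                self.closing_speed = self.last_distance - observed_distance
            self.last_distance = observed_distance
        elif self.last_distance is not None:
            # No percept: predict the current distance from the model.
            self.last_distance -= self.closing_speed
        if self.last_distance is not None and self.last_distance <= self.danger_zone:
            return "brake"
        return "cruise"
```

Fed the percepts `12.0, 9.0, None, None`, the agent brakes on the fourth step even though it has not seen the pedestrian for two steps; a simple reflex agent would have no basis for that decision.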

Goal-based agents introduce deliberative reasoning, planning action sequences to achieve predefined objectives. A delivery robot planning optimal routes through a warehouse demonstrates this capability, analysing layouts, package weights, and traffic conditions. These agents employ search algorithms and symbolic planners, though they can become computationally intensive in complex environments.

Figure 4 A model-based, goal-based agent
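
Goal-based planning can be illustrated with breadth-first search over a small warehouse grid. This is only a sketch: the grid encoding (0 for free, 1 for shelving) and the function name are assumptions, and it ignores package weights and traffic, which a real planner would fold into step costs.

```python
from collections import deque
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]


def plan_route(grid: List[List[int]], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """Breadth-first search; returns a shortest path of cells, or None if unreachable."""
    rows, cols = len(grid), len(grid[0])
    frontier = deque([start])
    came_from: Dict[Cell, Optional[Cell]] = {start: None}
    while frontier:
        current = frontier.popleft()
        if current == goal:
            # Walk the parent links back to the start.
            path: List[Cell] = []
            node: Optional[Cell] = current
            while node is not None:
                path.append(node)
                node = came_from[node]
            return path[::-1]
        r, c = current
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] == 0 and nxt not in came_from:
                came_from[nxt] = current
                frontier.append(nxt)
    return None


WAREHOUSE = [
    [0, 0, 0],
    [1, 1, 0],   # shelving blocks the direct route
    [0, 0, 0],
]
```

`plan_route(WAREHOUSE, (0, 0), (2, 0))` returns the seven-cell detour around the shelving. The frontier grows with the size of the state space, which is the computational cost the paragraph above refers to.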

Utility-based agents further evolve this paradigm by optimising decisions through quantitative metrics. An investment bot allocating portfolios exemplifies this approach, balancing risk, return, and liquidity using Markov decision processes. These agents excel at multi-objective optimisation but require careful utility modelling.

Figure 5 A model-based, utility-based agent
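
The core idea, scoring each option and picking the best, can be shown with a weighted sum. Note that this is a one-shot utility comparison, not a Markov decision process; the asset figures and weights below are invented for illustration only.

```python
from typing import Dict

Option = Dict[str, float]

# Hypothetical options: values are made up for the example.
OPTIONS: Dict[str, Option] = {
    "bonds": {"expected_return": 0.03, "risk": 0.02, "liquidity": 0.9},
    "equities": {"expected_return": 0.08, "risk": 0.15, "liquidity": 0.8},
    "real_estate": {"expected_return": 0.06, "risk": 0.08, "liquidity": 0.2},
}


def utility(option: Option, weights: Dict[str, float]) -> float:
    """Reward return and liquidity, penalise risk."""
    return (
        weights["return"] * option["expected_return"]
        - weights["risk"] * option["risk"]
        + weights["liquidity"] * option["liquidity"]
    )


def choose(options: Dict[str, Option], weights: Dict[str, float]) -> str:
    """Pick the option name with the highest utility under the given weights."""
    return max(options, key=lambda name: utility(options[name], weights))


CAUTIOUS = {"return": 1.0, "risk": 1.0, "liquidity": 0.05}
AGGRESSIVE = {"return": 1.0, "risk": 0.2, "liquidity": 0.05}
```

With these numbers the cautious weights select bonds and the aggressive weights select equities. The same options produce different decisions purely because the utility function changed, which is why utility modelling needs care.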

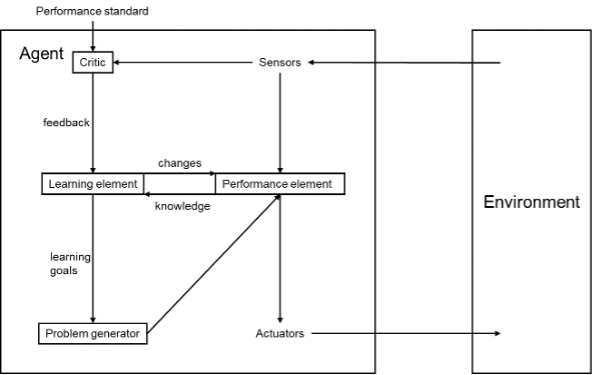

Learning agents represent the most sophisticated category, autonomously improving through feedback loops. Modern recommendation systems showcase this capability, evolving through analysis of user interactions and engagement metrics. These agents comprise a learning element, critic, performance element, and problem generator, enabling continuous adaptation to dynamic environments.

Figure 6 A general learning agent
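
The four components can be mapped onto a toy recommender. Everything here is an assumption made for illustration: the class name, the deterministic "explore every fourth step" schedule (chosen so the behaviour is reproducible), and the simulated user who only clicks one category.

```python
from typing import Dict, List


class LearningRecommender:
    def __init__(self, items: List[str], explore_every: int = 4) -> None:
        self.items = list(items)
        self.explore_every = explore_every
        self.estimates: Dict[str, float] = {item: 0.0 for item in self.items}
        self.counts: Dict[str, int] = {item: 0 for item in self.items}
        self.steps = 0

    def performance_element(self) -> str:
        """Exploit: pick the item with the highest estimated click rate."""
        return max(self.items, key=lambda item: self.estimates[item])

    def problem_generator(self) -> str:
        """Explore: suggest the least-tried item to gather new experience."""
        return min(self.items, key=lambda item: self.counts[item])

    @staticmethod
    def critic(clicked: bool) -> float:
        """Turn raw feedback into a reward signal."""
        return 1.0 if clicked else 0.0

    def learning_element(self, item: str, reward: float) -> None:
        """Update the running-average estimate for the item."""
        self.counts[item] += 1
        self.estimates[item] += (reward - self.estimates[item]) / self.counts[item]

    def recommend(self) -> str:
        self.steps += 1
        if self.steps % self.explore_every == 0:
            return self.problem_generator()
        return self.performance_element()


def simulate(rounds: int = 12) -> LearningRecommender:
    agent = LearningRecommender(["news", "sports", "cooking"])
    for _ in range(rounds):
        item = agent.recommend()
        clicked = item == "cooking"   # toy user who only clicks cooking content
        agent.learning_element(item, agent.critic(clicked))
    return agent
```

In this simulation the agent starts by recommending news, discovers cooking only through an exploration step, and from then on exploits it. Without the problem generator it would never have tried the winning item.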

In practice, these agent types often collaborate in multi-agent systems, where different specialised agents work together to solve complex problems. For instance, a customer service platform might combine simple reflex agents for routine queries with learning agents for complex problem-solving, coordinated by a utility-based agent that optimises overall system performance.

Anthropic’s Agentic Taxonomy

Anthropic distinguishes between two primary architectures. Workflows represent predefined LLM/tool orchestrations suitable for deterministic tasks like data entry automation. In contrast, agents are dynamic systems where LLMs self-direct tool usage, excelling at ambiguous tasks such as research synthesis or legal contract drafting.

Tool Calling: Extending Capabilities

Tools amplify agents’ effectiveness through knowledge augmentation, capability extension, and write actions. Web search APIs reduce hallucinations by grounding responses in real-time data, while specialised tools like calculators overcome LLMs’ computational limitations. Chameleon, a GPT-4-powered agent augmented with 13 tools, outperformed GPT-4 alone on several benchmarks. More tools are not automatically better, however: every tool description consumes context window space, and a larger tool set makes it harder for the model to select the right one, so tool curation requires careful balance.

Table 1 According to [5], agent tools can be categorised into three main types

| Tool Type | Examples | Impact |
| --- | --- | --- |
| Knowledge Augmentation | Web search, SQL queries | Reduces hallucinations |
| Capability Extension | Calculators, code executors | Overcomes LLM math/coding limits |
| Write Actions | Email APIs, database updates | Enables end-to-end automation |
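
A tool-calling layer is, at its simplest, a registry plus a dispatcher. The sketch below assumes the model emits a call as a dictionary of the form `{"tool": ..., "args": ...}`; that format, the `tool` decorator, and the two example tools are hypothetical and do not correspond to any particular vendor's API.

```python
import operator
from typing import Any, Callable, Dict

TOOLS: Dict[str, Callable[..., Any]] = {}


def tool(name: str) -> Callable[[Callable[..., Any]], Callable[..., Any]]:
    """Decorator that registers a function under a tool name."""
    def register(fn: Callable[..., Any]) -> Callable[..., Any]:
        TOOLS[name] = fn
        return fn
    return register


@tool("calculator")          # capability extension
def calculator(a: float, b: float, op: str) -> float:
    ops = {"+": operator.add, "-": operator.sub,
           "*": operator.mul, "/": operator.truediv}
    return ops[op](a, b)


@tool("lookup")              # knowledge augmentation (stand-in for search or SQL)
def lookup(key: str) -> str:
    knowledge = {"return_policy": "30 days"}
    return knowledge.get(key, "not found")


def dispatch(call: Dict[str, Any]) -> Dict[str, Any]:
    """Run a model-requested tool call and return a result or an error."""
    name = call["tool"]
    if name not in TOOLS:
        return {"error": f"unknown tool: {name}"}
    try:
        return {"result": TOOLS[name](**call["args"])}
    except Exception as exc:   # surface failures to the model instead of crashing
        return {"error": str(exc)}
```

Returning errors as data rather than raising lets the calling model see what went wrong and try a different call.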

Workflow Patterns

Modern agent workflows extend beyond simple sequential execution to handle complex control flows.

Prompt chaining forms the foundation, breaking tasks into sequential steps with validation gates between them—ideal for tasks like generating content then translating it.

Figure 7 Prompt chaining (sequential)
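
Prompt chaining with validation gates might look like the following. The `draft` and `translate` functions are stubs standing in for LLM calls, and the length check is an arbitrary example of a gate; all names are hypothetical.

```python
from typing import Callable, List, Optional, Tuple

Step = Callable[[str], str]
Gate = Callable[[str], bool]


def run_chain(text: str, stages: List[Tuple[Step, Optional[Gate]]]) -> str:
    """Run steps in order; stop with an error if a gate rejects a step's output."""
    for step, gate in stages:
        text = step(text)
        if gate is not None and not gate(text):
            raise ValueError(f"gate failed after step {step.__name__!r}")
    return text


def draft(topic: str) -> str:
    return f"Draft about {topic}"       # stub for a content-generation prompt


def translate(text: str) -> str:
    return f"[fr] {text}"               # stub for a translation prompt


def short_enough(text: str) -> bool:
    return 0 < len(text) <= 200         # example gate: non-empty and not too long
```

A call such as `run_chain("agents", [(draft, short_enough), (translate, None)])` only reaches the translation step if the draft passes its gate, so a bad intermediate result is caught before later steps spend tokens on it.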

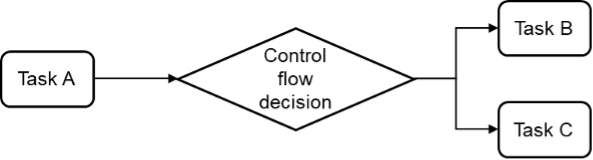

Routing implements conditional logic, directing inputs to specialised handlers based on classification, such as routing complex queries to more capable models while handling simple ones with lighter models.

Figure 8 Routing (if conditions)
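
Routing is a classifier followed by a lookup. In this sketch the classifier is a keyword and length heuristic standing in for an LLM or trained classifier, and the model names `small-model` and `large-model` are placeholders.

```python
from typing import Callable, Dict

COMPLEX_MARKERS = {"why", "compare", "explain"}


def classify(query: str) -> str:
    """Heuristic stand-in for a learned classifier (assumption)."""
    words = [word.strip("?,.!").lower() for word in query.split()]
    if len(words) > 12 or COMPLEX_MARKERS.intersection(words):
        return "complex"
    return "simple"


HANDLERS: Dict[str, Callable[[str], str]] = {
    "simple": lambda query: f"small-model: {query}",    # cheaper, faster path
    "complex": lambda query: f"large-model: {query}",   # more capable path
}


def route(query: str) -> str:
    return HANDLERS[classify(query)](query)
```

Keeping classification separate from handling means either side can be replaced independently, for example swapping the heuristic for a small model without touching the handlers.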

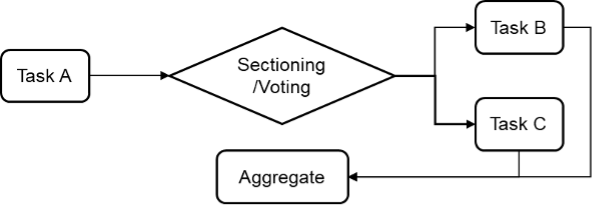

Parallel workflows manifest in two forms: sectioning (breaking tasks into concurrent subtasks) and voting (running multiple instances for consensus). For instance, code review might employ multiple specialised agents to simultaneously evaluate different security aspects. The orchestrator-workers pattern introduces dynamic task decomposition, where a central agent breaks down complex problems and coordinates specialised workers—particularly valuable in tasks like multi-file code modifications where subtasks can’t be predetermined.

Figure 9 Parallel workflow
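
Both parallel forms can be sketched with the standard library's thread pool. The reviewer functions are trivial string checks standing in for specialised LLM reviewers, and every name below is illustrative.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List


def run_sections(code: str, reviewers: Dict[str, Callable[[str], str]]) -> Dict[str, str]:
    """Sectioning: each reviewer examines a different aspect concurrently."""
    with ThreadPoolExecutor() as pool:
        futures = {name: pool.submit(fn, code) for name, fn in reviewers.items()}
        return {name: future.result() for name, future in futures.items()}


def vote(code: str, judges: List[Callable[[str], str]]) -> str:
    """Voting: run several judges on the same input and take the majority verdict."""
    with ThreadPoolExecutor() as pool:
        verdicts = list(pool.map(lambda judge: judge(code), judges))
    return Counter(verdicts).most_common(1)[0][0]


def check_secrets(code: str) -> str:
    return "flagged" if "password" in code.lower() else "ok"


def check_injection(code: str) -> str:
    return "flagged" if "eval(" in code else "ok"


REVIEWERS = {"secrets": check_secrets, "injection": check_injection}
```

Threads suit this pattern because real reviewers would spend most of their time waiting on network calls to a model, not computing locally.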

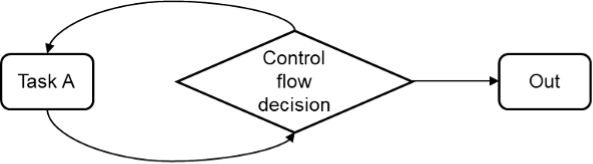

The evaluator-optimiser pattern implements feedback loops, where one agent generates solutions while another provides iterative critique, much like a human review process. This approach particularly shines in tasks requiring nuanced refinement, such as literary translation or complex research synthesis. These patterns can be combined and nested to create sophisticated workflows tailored to specific use cases, though increased complexity demands careful attention to error handling and resource management.

Figure 10 Evaluator-optimiser workflow (loop)
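
The evaluator-optimiser loop has a simple skeleton: generate, critique, repeat until accepted or a round limit is hit. The toy generator and evaluator below are deterministic stubs standing in for two LLM calls; their names and the feedback strings are assumptions.

```python
from typing import Callable, Optional, Tuple

Generate = Callable[[str, str, Optional[str]], str]
Evaluate = Callable[[str], Tuple[bool, Optional[str]]]


def refine(task: str, generate: Generate, evaluate: Evaluate,
           max_rounds: int = 5) -> Tuple[str, int]:
    """Return the final draft and the number of rounds used."""
    draft = ""
    feedback: Optional[str] = None
    for round_number in range(1, max_rounds + 1):
        draft = generate(task, draft, feedback)
        accepted, feedback = evaluate(draft)
        if accepted:
            return draft, round_number
    return draft, max_rounds    # round limit reached without acceptance


def toy_generate(task: str, previous: str, feedback: Optional[str]) -> str:
    if feedback is None:
        return task                      # first draft: echo the task text
    if feedback == "capitalise":
        return previous.capitalize()
    if feedback == "add full stop":
        return previous + "."
    return previous


def toy_evaluate(draft: str) -> Tuple[bool, Optional[str]]:
    if not draft[:1].isupper():
        return False, "capitalise"
    if not draft.endswith("."):
        return False, "add full stop"
    return True, None
```

The `max_rounds` cap matters in practice: without it, a generator and evaluator that never agree would loop indefinitely and keep incurring cost.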

Agents for Open-Ended Problems

Agents excel in scenarios requiring autonomy, adaptability, and dynamic tool chaining. A critical design principle is to decouple planning from execution, which prevents wasted resources. While it’s possible to combine planning and execution in a single prompt, this approach risks executing inefficient or misaligned plans without oversight. For example, an agent tasked with market analysis might generate a thousand-step plan involving unnecessary API calls and data processing steps, consuming hours of computation time and incurring significant costs before its ineffectiveness becomes apparent.

By separating planning from execution, each proposed plan can be validated for efficiency and alignment with goals before resources are committed. This decoupling naturally leads to a multi-agent architecture with specialised components: a planner agent for generating task sequences, a validator agent for assessing feasibility and efficiency, and an executor agent for implementing validated plans with error recovery mechanisms. In this sense, many effective agent systems are inherently multi-agent, as complex workflows benefit from this separation of concerns and specialised expertise.
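
The planner, validator, and executor split can be sketched as three small functions. This is an illustrative skeleton only: the `Step` type, the ten-step limit, the retry-once policy, and the tool names in the usage note are all assumptions, and a real planner would be an LLM call in place of the plain function passed in here.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List, Set


@dataclass(frozen=True)
class Step:
    tool: str
    arg: str


def validate(plan: List[Step], allowed_tools: Set[str], max_steps: int = 10) -> List[str]:
    """Validator: list the problems found; an empty list means the plan may run."""
    problems = []
    if len(plan) > max_steps:
        problems.append(f"plan has {len(plan)} steps, limit is {max_steps}")
    for index, step in enumerate(plan):
        if step.tool not in allowed_tools:
            problems.append(f"step {index}: unknown tool {step.tool!r}")
    return problems


def execute(plan: List[Step], tools: Dict[str, Callable[[str], Any]],
            max_retries: int = 1) -> List[Any]:
    """Executor: run each step, retrying transient failures a limited number of times."""
    results = []
    for step in plan:
        for attempt in range(max_retries + 1):
            try:
                results.append(tools[step.tool](step.arg))
                break
            except RuntimeError:
                if attempt == max_retries:
                    raise
    return results


def plan_validate_execute(goal: str, planner: Callable[[str], List[Step]],
                          tools: Dict[str, Callable[[str], Any]],
                          max_steps: int = 10) -> Dict[str, Any]:
    plan = planner(goal)
    problems = validate(plan, set(tools), max_steps)
    if problems:
        return {"status": "rejected", "problems": problems}   # nothing was executed
    return {"status": "done", "results": execute(plan, tools)}
```

Given tools named, say, `fetch` and `summarise`, a two-step plan runs to completion, while a planner that returns a thousand `fetch` steps is rejected by `validate` before a single tool call is made.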

Implementation requires careful consideration of costs, error propagation, and safety measures. Write actions demand sandboxing and human-in-the-loop approvals, while long-running tasks must balance resource utilisation against API costs.
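
A human-in-the-loop gate for write actions can be as small as a wrapper around the tool call. In this sketch the set of write tools and the `approve` callback are assumptions; in a real system `approve` might open a review ticket or prompt an operator, and sandboxing would be handled separately.

```python
from typing import Any, Callable, Dict

# Hypothetical names of tools that change external state.
WRITE_TOOLS = {"send_email", "update_database"}


def guarded_call(tool_name: str, args: Dict[str, Any],
                 tools: Dict[str, Callable[..., Any]],
                 approve: Callable[[str, Dict[str, Any]], bool]) -> Dict[str, Any]:
    """Run read-only tools directly; require approval before any write action."""
    if tool_name in WRITE_TOOLS and not approve(tool_name, args):
        return {"status": "blocked", "tool": tool_name}
    return {"status": "ok", "result": tools[tool_name](**args)}
```

Read-only tools pass straight through, so the approval step adds latency only where an action could have side effects.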

Conclusion

From Russell & Norvig’s theoretical frameworks to dynamic agentic systems, AI agents have evolved into versatile tools for automating complex tasks. By combining LLMs with modular tools and decoupled planning-execution architectures, modern agents address open-ended challenges—from customer service to scientific research. However, their effectiveness hinges on thoughtful tool curation, rigorous validation, and controlled autonomy. While space constraints limited our exploration of real-world deployments, multi-agent collaboration patterns, and emerging safety considerations, these topics represent critical areas for practitioners to consider when implementing agent systems. The field continues to evolve rapidly, particularly in areas of agent alignment, memory management, and scalable multi-agent architectures.

For further reading:

- https://huyenchip.com/2025/01/07/agents.html

- https://www.anthropic.com/research/building-effective-agents

- https://huggingface.co/learn/agents-course/unit0/introduction

References:

- Amazon Web Services. (2024). What are AI Agents? AWS Cloud Computing Services. Retrieved from https://aws.amazon.com/what-is/ai-agents/

- Russell, S. J., & Norvig, P. (1995). Artificial Intelligence: A Modern Approach.

- Anthropic. (2024). Building Effective Agents. Anthropic Research Blog.

- Huyen, C. (2025). AI Engineering: Building Applications with Foundation Models.